Market Size

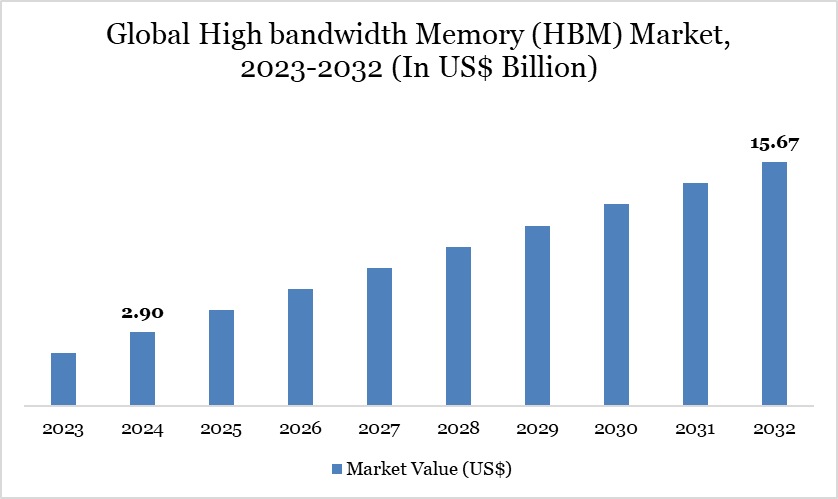

The global high bandwidth memory (HBM) market reached US$ 2.90 billion in 2024 and is expected to reach US$ 15.67 billion by 2032, growing at a CAGR of 23.63% during the forecast period 2025-2032.
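
As a quick sanity check on the headline figures, the growth rate implied by the two market-size endpoints can be recomputed with the standard CAGR formula, (end / start)^(1 / years) - 1. This is a minimal sketch that assumes eight annual compounding periods from 2024 to 2032. The report does not state its base-year or rounding convention, and the helper names `cagr` and `project` are illustrative only.

```python
# Implied compound annual growth rate (CAGR) from the report's endpoints.
# Assumption: eight annual compounding periods (2024 -> 2032); the report's
# own base-year convention is not stated.

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Compound start_value forward at a fixed annual rate."""
    return start_value * (1 + rate) ** years


size_2024 = 2.90   # US$ billion, as reported
size_2032 = 15.67  # US$ billion, as reported

implied = cagr(size_2024, size_2032, years=8)
print(f"Implied CAGR 2024-2032: {implied:.2%}")

# What the stated 23.63% would produce from the 2024 base over eight years
stated_2032 = project(size_2024, 0.2363, 8)
print(f"2032 size at the stated CAGR: US$ {stated_2032:.2f} billion")
```

Under this assumption, the implied rate comes out at roughly 23.5%, slightly below the stated 23.63%. The small gap most likely reflects a different base year or rounding in the source rather than an error in either figure.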

The HBM market is witnessing rapid growth as industries demand faster and more energy-efficient memory solutions to support advanced computing. With the increasing adoption of artificial intelligence (AI), high-performance computing (HPC), cloud data centers, and advanced graphics processing, the need for memory technologies that deliver higher bandwidth and lower latency has expanded significantly. Semiconductor companies are integrating HBM into processors, accelerators, and networking devices to handle massive data loads while improving efficiency and scalability.

Despite challenges such as high production costs, limited supply chain capacity, and complex manufacturing processes, advancements in 3D stacking, through-silicon vias (TSVs), and next-generation HBM3 and HBM3E technologies are creating new growth opportunities. These innovations enable higher memory density, lower power consumption, and greater computing performance, making HBM a critical component of next-generation computing architectures across multiple industries.

Global High Bandwidth Memory (HBM) Market Trends

One of the key trends shaping the HBM market is the increasing use of HBM in AI and machine learning workloads. Training large language models and deep learning systems requires enormous memory bandwidth, and HBM is becoming the preferred choice due to its ability to handle real-time data at high speed while minimizing power usage.

Another strong trend is the adoption of HBM in data centers and HPC environments. Cloud providers and research institutions are deploying HBM-equipped CPUs, GPUs, and AI accelerators for tasks such as big data analytics, scientific simulations, and immersive AR/VR applications. In parallel, memory manufacturers are expanding investment in HBM3 and HBM3E, which offer significantly higher bandwidth and capacity than earlier generations. Together, these trends point to a market-wide shift toward next-generation memory that can keep pace with the computational demands of digital transformation worldwide.

Market Scope

Metrics | Details |

By Type | HBM, HBM2, HBM2E, HBM3E |

By Memory Capacity | Up to 4GB, 4GB to 8GB, 8GB to 16GB, Above 16GB |

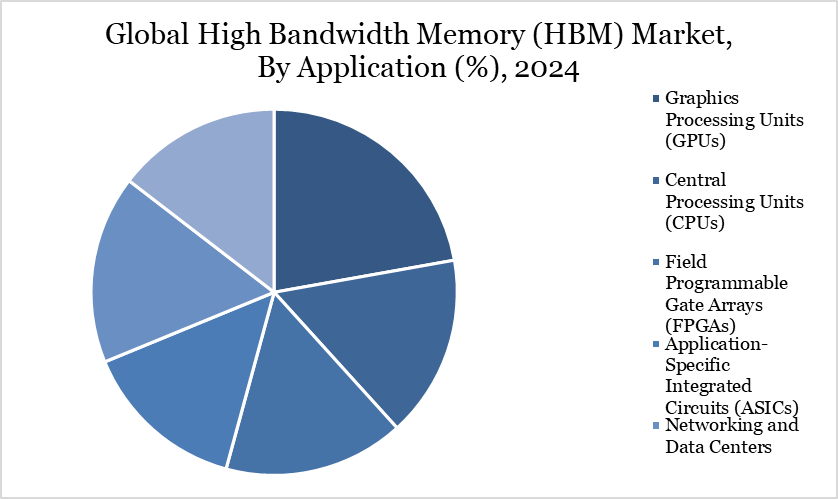

By Application | Graphics Processing Units (GPUs), Central Processing Units (CPUs), Field Programmable Gate Arrays (FPGAs), Application-Specific Integrated Circuits (ASICs), Networking and Data Centers, Others |

By End-Users | IT & Telecommunication, Consumer Electronics, Automotive, Healthcare, Defense & Aerospace, Others |

By Region | North America, South America, Europe, Asia-Pacific, Middle East and Africa |

Report Insights Covered | Competitive Landscape Analysis, Company Profile Analysis, Market Size, Share, Growth |


Market Dynamics

Rising Demand for AI and High-Performance Computing Driving HBM Adoption

The explosive growth of artificial intelligence (AI), high-performance computing (HPC), and advanced graphics is a key driver of the High Bandwidth Memory (HBM) market, because these applications require massive data throughput and low latency that conventional memory struggles to deliver. Compared with conventional DDR DRAM or GDDR, HBM offers higher bandwidth per watt, making it essential for training large language models, running scientific simulations, and supporting real-time analytics.

For instance, NVIDIA’s H100 GPU and AMD’s MI300 accelerator use HBM3 to deliver multi-terabyte-per-second memory bandwidth for AI and HPC workloads. Similarly, major cloud service providers such as AWS, Microsoft Azure, and Google Cloud are integrating HBM-enabled processors to meet rising enterprise demand for AI-driven services. As industries accelerate their digital transformation, the adoption of HBM across data centers, supercomputers, and AI platforms is becoming one of the strongest growth engines for the market.
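
The "multi-terabyte-per-second" figures come from simple arithmetic. Peak bandwidth per stack equals the interface width multiplied by the per-pin data rate, and an accelerator adds up the bandwidth of every stack it carries. The sketch below assumes a 1024-bit interface per stack and the 6.4 Gb/s JEDEC HBM3 headline pin rate. The five-stack accelerator is hypothetical and is not the published specification of any product named above. Real devices may also run their pins below the maximum rate.

```python
# Peak theoretical HBM bandwidth: interface width x per-pin data rate.
# Assumptions (illustrative, not any specific product's spec):
#   - each HBM stack exposes a 1024-bit data interface
#   - HBM3 pins run at 6.4 Gb/s (JEDEC HBM3 headline rate)
#   - a hypothetical accelerator with five active stacks

BITS_PER_BYTE = 8


def stack_bandwidth_gb_s(interface_bits: int, pin_rate_gbit: float) -> float:
    """Peak bandwidth of one stack in gigabytes per second (GB/s)."""
    return interface_bits * pin_rate_gbit / BITS_PER_BYTE


def accelerator_bandwidth_tb_s(stacks: int, interface_bits: int,
                               pin_rate_gbit: float) -> float:
    """Aggregate peak bandwidth across all stacks in terabytes per second (TB/s)."""
    return stacks * stack_bandwidth_gb_s(interface_bits, pin_rate_gbit) / 1000


per_stack = stack_bandwidth_gb_s(1024, 6.4)
total = accelerator_bandwidth_tb_s(stacks=5, interface_bits=1024, pin_rate_gbit=6.4)
print(f"Per stack: {per_stack:.1f} GB/s")
print(f"Five stacks: {total:.2f} TB/s")
```

The very wide, relatively slow interface is one reason HBM can reach high aggregate bandwidth. A GDDR6 device, by contrast, has a 32-bit interface and makes up the difference with much higher per-pin rates.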

High Production Costs and Supply Chain Constraints Limiting Market Growth

High production costs and supply chain challenges act as restraints for the HBM market, as the technology requires complex 3D stacking, through-silicon vias (TSVs), and advanced packaging. Unlike conventional DRAM, HBM manufacturing involves specialized processes that increase capital expenditure and restrict mass adoption.

For instance, Samsung Electronics, SK Hynix, and Micron Technology dominate the global HBM supply, leading to limited vendor options and potential pricing pressures. Shortages of advanced semiconductor packaging capacity further constrain availability, impacting GPU, AI accelerator, and HPC system launches. These challenges increase costs for OEMs, slow down deployment cycles, and restrict broader adoption of HBM in mid-range applications, thereby limiting the overall market growth potential.

Segment Analysis

The global high bandwidth memory (HBM) market is segmented based on type, memory capacity, application, end-users and region.

Graphics Processing Units (GPUs) as the Core Driver of HBM Demand

Graphics Processing Units (GPUs) are a major driver of the High Bandwidth Memory (HBM) market, accounting for the largest share of HBM adoption globally. With rising demand for high-performance graphics, artificial intelligence (AI), and gaming, GPUs require extremely high-bandwidth, low-latency memory to handle complex workloads efficiently. For instance, modern data-center GPUs from NVIDIA and AMD integrate HBM2E and HBM3 to deliver multi-terabyte-per-second bandwidth for advanced rendering, deep learning, and data-intensive tasks.

In the data center sector, cloud service providers such as AWS, Microsoft Azure, and Google Cloud are increasingly deploying GPU-based accelerators with HBM to power AI training, big data analytics, and HPC applications. Similarly, in gaming and visualization, the surge in demand for AR/VR, 3D modeling, and real-time rendering continues to boost GPU shipments equipped with HBM.

The growing popularity of GPUs in AI accelerators, autonomous vehicles, and enterprise HPC clusters has further strengthened their role as the largest application segment for HBM. Moreover, as global OEMs and semiconductor companies launch more powerful GPU architectures on shorter release cycles, faster and more efficient memory integration becomes critical. Overall, GPUs remain the core growth engine of the HBM market, driving both volume demand and next-generation technology adoption.

Geographical Penetration

Asia-Pacific: The Global Hub Driving HBM Market Growth

Asia-Pacific leads the High Bandwidth Memory (HBM) market as the world's largest hub for semiconductor manufacturing and a fast-growing adopter of advanced computing. South Korea, Taiwan, and Japan anchor global memory production and advanced packaging. Samsung Electronics and SK Hynix, both headquartered in South Korea, spearhead HBM development and supply alongside Micron, which operates major fabs in Taiwan and Japan. China is rapidly expanding its semiconductor ecosystem under state-backed initiatives, boosting demand for HBM in AI, HPC, and cloud infrastructure.

Japan is advancing in high-performance computing with projects such as the Fugaku supercomputer, whose Fujitsu A64FX processors use HBM2. South Korea continues to lead in large-scale memory fabrication and packaging, while Taiwan's foundries, such as TSMC, are critical for integrating HBM with GPUs, CPUs, and AI accelerators. India is also emerging as a growth market, with rising demand for AI-driven applications, gaming, and cloud services.

The region’s rapid expansion of data centers, AI research, and 5G/IoT ecosystems further accelerates HBM adoption. Additionally, the growing export of semiconductors and advanced computing devices from Asia-Pacific to North America and Europe strengthens its role as a global supply hub. As a result, Asia-Pacific remains the central growth engine for the HBM market, driven by production scale, technological leadership, and rising digital transformation.

Technological Analysis

Technological advancements are reshaping High Bandwidth Memory (HBM) by improving performance, scalability, and efficiency for next-generation computing. Innovations in 3D stacking and through-silicon via (TSV) technology enable higher memory density and faster communication between layers, reducing latency while maintaining a compact footprint. The development of HBM2E, HBM3, and HBM3E is pushing bandwidth into multi-terabyte-per-second ranges, supporting demanding workloads in AI, HPC, and cloud data centers.
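
The density gains from 3D stacking reduce to simple arithmetic. A stack's capacity equals its height (the number of stacked DRAM dies) multiplied by the per-die density. The sketch below assumes that every die in a stack has the same density. Under that assumption, the 36GB 12-high HBM3E stack cited under Key Developments implies 24 Gb dies. The helper names are illustrative, and the 8-high case is a hypothetical comparison.

```python
# Capacity of a 3D-stacked HBM device = stack height (number of DRAM dies)
# x per-die density. Assumption: all dies in a stack share one density.

BITS_PER_BYTE = 8


def stack_capacity_gb(dies: int, die_density_gbit: float) -> float:
    """Stack capacity in gigabytes, from die count and per-die density in gigabits."""
    return dies * die_density_gbit / BITS_PER_BYTE


def die_density_gbit(stack_gb: float, dies: int) -> float:
    """Per-die density in gigabits implied by a stack's capacity and height."""
    return stack_gb * BITS_PER_BYTE / dies


# A 36GB, 12-high stack implies 24 Gb per die.
print(die_density_gbit(36, 12))   # 24.0
# Hypothetical comparison: the same 24 Gb die in an 8-high stack.
print(stack_capacity_gb(8, 24))   # 24.0
```

Under this model, capacity can grow along two independent axes: taller stacks, which TSVs make possible, and denser individual dies.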

Artificial Intelligence (AI) and Machine Learning (ML) are increasingly leveraged in memory design and integration, optimizing chip architecture, workload management, and energy efficiency. Advanced packaging technologies, such as chiplets and heterogeneous integration, allow HBM to be paired more effectively with CPUs, GPUs, and AI accelerators, maximizing system performance. Meanwhile, innovations in low-power design and thermal management are addressing energy consumption challenges in large-scale data centers.

In addition, the use of digital twins and simulation-driven design is accelerating memory development cycles, reducing reliance on physical prototypes. Together, these technological improvements are enabling HBM to become the foundation of next-generation computing architectures, supporting faster data processing, lower power consumption, and broader adoption across industries.

Competitive Landscape

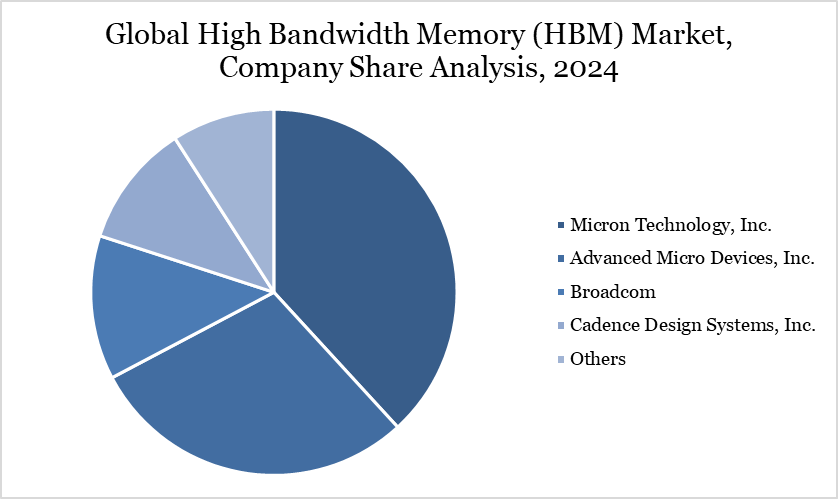

The major global players in the market include Micron Technology, Inc., Advanced Micro Devices, Inc., Broadcom, Cadence Design Systems, Inc., Marvell, Huawei Technologies, Infineon Technologies AG, SK Hynix Inc., Arm Holdings PLC ADR, and Intel Corporation, among others.

Key Developments

In 2025, Huawei Technologies is set to unveil a technological breakthrough aimed at reducing China’s reliance on high-bandwidth memory (HBM) chips for running artificial intelligence reasoning models, according to state-run Securities Times.

In 2025, Micron Technology, Inc. announced the integration of its HBM3E 36GB 12-high offering into the upcoming AMD Instinct MI350 Series solutions. The collaboration highlighted the critical role of power efficiency and performance in training large AI models, delivering high-throughput inference, and handling complex HPC workloads such as data processing and computational modeling. It also marked another significant milestone in HBM industry leadership for Micron, showcasing its strong execution and the value of its customer relationships.

Why Choose DataM?

Data-Driven Insights: Dive into detailed analyses with granular insights such as pricing, market shares and value chain evaluations, enriched by interviews with industry leaders and disruptors.

Post-Purchase Support and Expert Analyst Consultations: As a valued client, gain direct access to our expert analysts for personalized advice and strategic guidance, tailored to your specific needs and challenges.

White Papers and Case Studies: Benefit quarterly from our in-depth studies related to your purchased titles, tailored to refine your operational and marketing strategies for maximum impact.

Annual Updates on Purchased Reports: As an existing customer, enjoy the privilege of annual updates to your reports, ensuring you stay abreast of the latest market insights and technological advancements. Terms and conditions apply.

Specialized Focus on Emerging Markets: DataM differentiates itself by delivering in-depth, specialized insights specifically for emerging markets, rather than offering generalized geographic overviews. This approach equips our clients with a nuanced understanding and actionable intelligence that are essential for navigating and succeeding in high-growth regions.

Value of DataM Reports: Our reports offer specialized insights tailored to the latest trends and specific business inquiries. This personalized approach provides a deeper, strategic perspective, ensuring you receive the precise information necessary to make informed decisions. These insights complement and go beyond what is typically available in generic databases.

Target Audience 2024

Manufacturers/Buyers

Industry Investors/Investment Bankers

Research Professionals

Emerging Companies